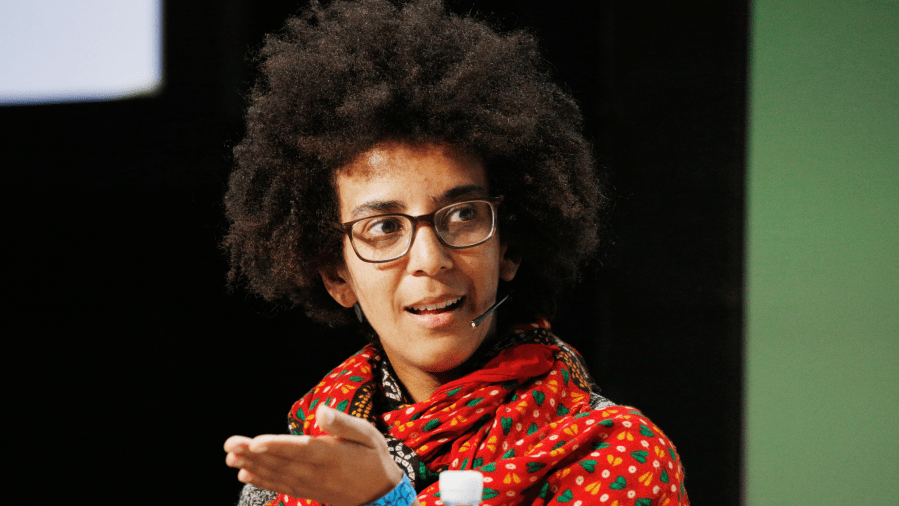

Timnit Gebru envisions a future for smart, ethical AI

Artificial intelligence can certainly be used or misused for harmful or illegal purposes, even unintentionally, when human biases are baked into its very code. So, what needs to happen to make sure AI is ethical?

Timnit Gebru is the founder and executive director of the new Distributed AI Research Institute, or DAIR. She previously worked on AI at Google and Microsoft.

Gebru said one issue with current AI research is the incentives for doing it in the first place. The following is an edited transcript of our conversation.

Timnit Gebru: The two incentive structures under which most AI research is currently organized correspond to the two funding entities. One is the Department of Defense or [the Defense Advanced Research Projects Agency], and it’s generally because they are more interested in autonomous warfare. Honestly, that’s my view. And then the second bucket is corporations. They want to figure out how they can make the most money possible. The question is, can we have a research institute that has a completely different incentive structure from the very beginning, and can we organize ourselves like that?

Kimberly Adams: Can you maybe give me some specifics about what this is going to look like in deployment to actually have research based on an alternative incentive structure?

Gebru: A lot of technology is commercialized from research. But as a research institute, that’s not necessarily our goal. Our goal, though, is to think about AI that benefits people in marginalized groups. How do we conduct that research? How do we include their voices? How do we make sure that we pay them and don’t exploit them? And my hope is, once we do that, we can arrive at actual research products that are beneficial to the community because their voice is part of it.

Adams: There are plenty of examples of how bias can creep into AI. We just had a conversation the other day about predictive policing, but are there examples you can point to of artificial intelligence that’s out there in the world right now being deployed for an ethical use?

Gebru: So one of them comes from my former colleagues at Makerere University in Uganda. [They] created an app on the phone to help small-scale cassava farmers surveil their farms using computer vision technology. So that means that they can tell whether there’s severe disease in their farms or not. And so I think this is great because this is, again, working in conjunction with small-scale farmers, not huge, you know, multinational corporations. And cassava is a very important crop for food security, especially as you have more and more climate change and climate catastrophe.

Adams: What is going to be different about doing this kind of research outside of a big tech firm, as opposed to within these companies, where you might have better access to what’s going on?

Gebru: If my goal is to work with people [in] marginalized groups, and I want to understand what issues they’re having and what ideas they have for how to work in technology that might benefit them, I should spend more time with that group of people rather than the people in the corporations. The people in the corporations will tell me about what issues they’re having in terms of the products that they want to build, but that’s not necessarily rooted in the needs of people in the groups that I’m hoping to work with. The other thing that’s going to be different is that the incentive structure is going to be different. So someone’s not going to ask me, how are you going to make money from this? Or how is this going to be benefiting Google? On the flip side, there is the question of what happens if I identify a problem with a technology that has proliferated, as we did. Before I got fired, my collaborators and I wrote a paper about what are called large language models, a type of language technology that is being used by literally everybody, all large corporations. When we identified the issues and synthesized them in a scientific paper, they freaked out and I got fired, so I am hoping that that’s not going to happen at my own research institute, right? So I think that we will have a little bit more freedom to discuss the root of the harms of these technologies.

Adams: You did leave Google in a very public way. You say that you were fired, the company claims that you resigned. How has that whole experience shaped the way that you’re approaching AI research more broadly?

Gebru: That’s a very good question. I think that entire experience of being fired from Google, because I certainly was fired, has strengthened my resolve, to be honest with you, to fight the power that these multinational corporations have. I, now more than ever, believe that this issue of bias in AI is not just a technical issue. So, when you’re in these corporations, they want people to believe that it’s a technical issue. You have to use large data sets and there are issues that come up with that and it’s a technical thing that you have to solve, but I truly believe that the root of it is the power that these multinational corporations have and the lack of worker power. So I really believe people should not lose health care the moment they are fired from their jobs because if people don’t have these kinds of rights, whenever you have someone on the inside who identifies harm, they’re going to be treated worse than I was, right? Like there are a lot of people who’ve been treated worse. So we really need to address the root of these issues and stop talking about it as a merely technical problem devoid of the societal context.

Adams: Here in Washington, where I am, there are a lot of lawmakers who are pushing for updated regulations for the tech industry, including regulating AI. What do you think of what you’ve seen so far in those efforts, and what role do you think the federal government should play in regulating artificial intelligence or tech companies in general?

Gebru: There needs to be regulation that specifically says that corporations need to show that their technologies are not harmful before they deploy them. The onus has to be on them, and then proactively, instead of concentrating power in the hands of a few groups of people in Silicon Valley, if you look at Silicon Valley, there’s like four or five people that have all the money and fund all the companies. We each have alternatives, right? We can have, for instance, states that fund certain startups, certain groups of people from low-income communities, etc. This type of investment might not seem like it’s related to AI, but it absolutely is, because these are the groups of people who were negatively impacted by AI and surveillance, etc.

Related links: More insight from Kimberly Adams

We have a link to the DAIR website here on our own site.

One project Gebru mentioned she’s excited about is research that uses aerial images of neighborhoods in South Africa to trace the history of apartheid in the country.

Another example of AI for good that she mentioned is featured in a story from Wired UK about a group in New Zealand who pioneered speech-recognition technology for the Māori language, and they’re now trying to prevent that data from being taken over by big tech firms.
