Can artificial intelligence identify guns fast enough to stop violence?

Chris Ciabarra is a serial tech entrepreneur, but on this particular day in Austin, Texas, he moves with the frenetic energy of a film producer on a schedule, giving rapid-fire instructions to a group of actors.

“James, ready? Outside,” said Ciabarra to James Dagger, who’s wearing a face mask. “As soon as he walks in the room, everyone run in all different directions and get out of the room. Is that clear?”

Dagger holds a very real-looking fake gun and enters the room suddenly. He yells, “Get down on the ground!” and the rest of the group scatters in different directions. The whole thing takes about 20 seconds, max. It looks like it’s really happening. And that’s because it’s meant to look real.

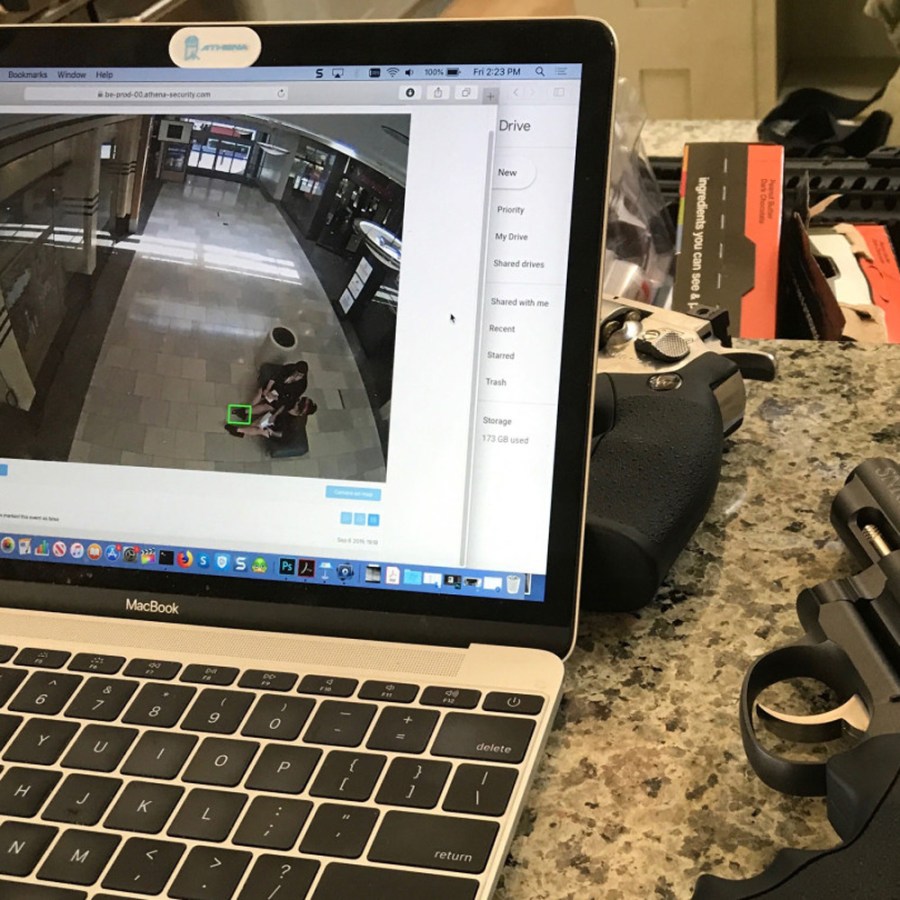

This is a film shoot held by Athena Security. It’s one of several companies creating artificial intelligence software to identify weapons. AI requires a lot of data to learn what is a gun — and what isn’t. But there aren’t enough images in the public domain of people holding weapons, and very few are from the perspective of security cameras. So Athena is creating its own data by filming the footage itself.

At the shoot, Dagger enters the room again, this time without a face mask. Then again, while wearing a hat. The idea is to get as many variations of the scene as possible so that the AI can still identify the gun, no matter what the person holding it looks like.

Athena is a startup, so it’s a film production on a budget: The company advertised for actors on Craigslist, and the group is working out of Ciabarra’s home in northwest Austin. Ciabarra is the chief technology officer and co-founder of the company. He said when it started, he looked at all sources of media, including professional films, but found it just wasn’t working.

“We started with all the Hollywood videos, movies. We found out [that] they’re shooting always straight on, never shooting top down,” Ciabarra said. Most security cameras are positioned 10 to 12 feet high.

There have been more than 350 mass shootings in the United States this year, according to the nonprofit Gun Violence Archive. The most deadly of those occurred in places that are accessible to the public: a shopping center in El Paso, a municipal building in Virginia Beach, outside a bar in Dayton, Ohio.

Athena sees public places with security cameras as potential customers. Its clients include malls, churches and schools. The company doesn’t make the cameras itself, but sells software that taps into the existing network. It charges a hardware installation fee of $400 per camera, followed by a regular monthly fee of $100 per camera.
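To put those fees in perspective, here is a rough sketch of the arithmetic in Python. The two fee figures come from the pricing described above; the 20-camera site is a hypothetical example, not a figure from Athena, and the calculation assumes no discounts or other charges.

```python
# Fees as reported in the article (US dollars per camera).
INSTALL_FEE_PER_CAMERA = 400   # one-time hardware installation fee
MONTHLY_FEE_PER_CAMERA = 100   # recurring monthly fee


def total_cost(cameras: int, months: int = 12) -> int:
    """Estimate the cost of covering `cameras` cameras for `months` months.

    Assumes the per-camera fees are flat, which the article does not confirm.
    """
    per_camera = INSTALL_FEE_PER_CAMERA + MONTHLY_FEE_PER_CAMERA * months
    return cameras * per_camera


# Hypothetical site with 20 cameras, first year of service.
print(total_cost(20))  # 32000
```

Under those assumptions, a 20-camera building would pay about $32,000 in its first year and $24,000 in each year after that.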

One of Athena’s clients is Archbishop Wood High School, in Bucks County, Pennsylvania, about an hour north of Philadelphia. The school learned about the software from one of its retired teachers, who happens to be Ciabarra’s mother. (Ciabarra is also a donor — he gave an $85,000 gift for a college and career counseling center named for his mom.)

“Most parents today, when they’re looking at schools, are looking for academics and a safe environment,” said Gary Zimmaro, president of Archbishop Wood. The school costs $9,400 a year to attend, and Zimmaro said good security can give it a competitive edge.

“We are surrounded here with very good public school districts,” Zimmaro said. “Part of our job is to make sure that we’re competitive and compliant with the same thing that they’re doing. And actually, they don’t have Athena. We do.”

A high-functioning AI system could work well as a kind of add-on to a traditional security network, according to Michael Littman, a Brown University computer science professor.

“If the system’s deployed well, then maybe what it could do is allow the same number of security agents to cover a wider area,” Littman said.

Athena wants to take this security a step further. Ciabarra said customers are asking for a way to respond if the software spots a person with a weapon.

“People ask me, ‘What can I do now? I called the police earlier, that’s great, [but] what’s going to save lives? I need something quicker,’” Ciabarra said. “And now I have an answer for that.”

His answer is a robot, which the company is developing. Clients can deploy it if the software spots someone with a weapon.

“It’s going to have a Taser gun on it,” Ciabarra said. “And it’s going to have gas on it so it can intercept the person and it can take the person out.”

There are already some police and security robots in use around the world, ranging from surveillance robots to prison guards to a lethal remote-controlled rover in use in Israel. But there don’t seem to be any laws regulating robots using nonlethal force. Ryan Calo, law professor at the University of Washington, said we’re not likely to see any legislation from Congress.

“In order for something to be a federal matter, there has to be a hook,” said Calo. “What’s more likely is a municipality or a state would prohibit it.”

Athena’s software still makes mistakes. On average, twice a day, each camera identifies an object as a weapon that is actually something else. It’ll see a woman crossing her feet, clad in black shoes in a shape that looks like a gun, or a person holding a phone at a weird angle, and identify it as a weapon. That’s why the company has humans look at every image the software says is a gun.
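A quick back-of-the-envelope sketch in Python shows how much human review that error rate could imply. The rate of two false alerts per camera per day is from the article; the camera count and time span are hypothetical, and the sketch assumes the rate holds evenly across cameras.

```python
# Average reported in the article: two false weapon alerts per camera per day.
FALSE_ALERTS_PER_CAMERA_PER_DAY = 2


def expected_false_alerts(cameras: int, days: int) -> int:
    """Estimate how many false alerts human reviewers would need to check."""
    return cameras * days * FALSE_ALERTS_PER_CAMERA_PER_DAY


# Hypothetical site with 20 cameras over a 30-day month.
print(expected_false_alerts(20, 30))  # 1200
```

At that rate, reviewers for a 20-camera site would look at roughly 1,200 false alerts a month.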

The technology has yet to catch someone holding a weapon who intends to be violent. That’s because while these mass shootings get a lot of attention, they are pretty rare. Brown’s Michael Littman said that makes it hard to know if the software is going to perform well in real life.

“Training a system to actually recognize these dangerous situations, when you have no training data to show a real dangerous situation, means that when it actually happens, it’s kind of a crapshoot as to whether things are going to be handled correctly.”
