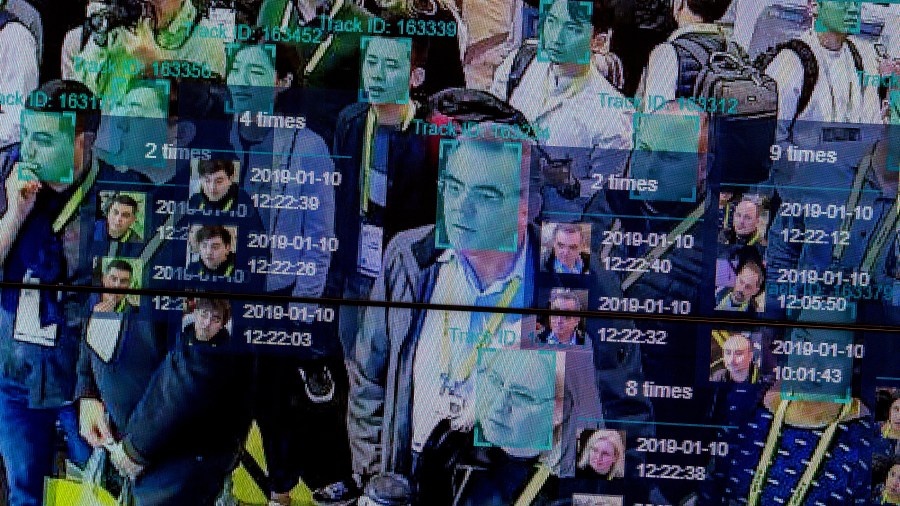

Not even the government knows the full extent of how government is using facial recognition

A new report from the Government Accountability Office (GAO) says at least 20 federal agencies are using facial recognition technology, and not just the obvious acronyms like the FBI, TSA and ICE. Agencies like the U.S. Fish and Wildlife Service, the FDA and NASA are using the tech, too.

And 13 of those agencies, more than half of those using facial recognition, don’t know what systems their employees are using or how often they use them. The agencies that do keep track say they’ve used it for identifying people at the January 6 insurrection and during last summer’s protests sparked by the murder of George Floyd. This includes the U.S. Postal Service, according to Gretta Goodwin, director of the GAO’s homeland security and justice team.

The following is an edited transcript of our conversation.

Gretta Goodwin: The Postal Inspection Service used facial recognition technology in conjunction with the civil unrest that happened last year. What they told us was that they were looking to see whether U.S. Postal property had been damaged and whether mail had been stolen, so they used facial recognition technology to help them identify individuals that way.

Kimberly Adams: Are all of these agencies allowed to use facial recognition in the way that they’re doing it?

Goodwin: I will say it depends. And let me explain to you why. So, some of the law enforcement agencies own their own facial recognition technology systems, and they use it for things like identifying employees. Some of the other law enforcement entities use the facial recognition technology for surveillance, or for identifying anyone who might be committing a crime. We looked at two types, so we asked agencies whether they owned their own facial recognition technology, and we asked agencies whether they use another entity’s facial recognition technology. And so, some of the agencies that we surveyed use another entity’s facial recognition technology.

Adams: And you mean other government entities?

Goodwin: Not necessarily. Sometimes they would use another government entity’s facial recognition technology system, sometimes they would use a private entity’s facial recognition technology system. Some of the private entities have access to a number of different types of images that law enforcement might not have access to.

Adams: It seems like there’s concern that maybe law enforcement officials would have access to a database, say like Clearview AI, which scrapes photos from social media. But these are photos that normally law enforcement couldn’t look at or use without a warrant, right?

Goodwin: That is correct. That is correct. And so, when the law enforcement agencies were making use of private companies’ facial recognition systems, such as a Clearview AI, they were accessing photos that they would not have normally been able to access because of privacy and civil liberties concerns.

Adams: How much do these agencies know about how their employees are using facial recognition systems?

Goodwin: Some of the federal agencies were not always monitoring how often or whether their employees were using the system. In our survey, 14 of the agencies told us that they use systems to support criminal investigations. But we found that only one of those 14 had awareness of which non-federal systems were being used by their employees. The U.S. Immigration and Customs Enforcement was the one that knew which non-federal systems were being used by the employees. The remaining 13 did not, and so this includes the FBI, the DEA, U.S. Customs and Border Protection, among others.

Adams: That’s kind of wild.

Goodwin: It is. And in another instance, officials from an agency initially told us that their employees did not use non-federal systems. However, after those officials conducted a poll, the agency learned that their employees had indeed used a system to conduct more than 1,000 facial recognition searches.

Adams: How well, based on what you found in your report, are these agencies adhering to existing privacy laws?

Goodwin: We didn’t go into a lot of detail in this report. The GAO issued another report earlier, in 2016. That report was mostly focused on the FBI. This report doesn’t go into a lot of the privacy and accuracy concerns, but we do raise them based on the information we know from just how the technology has been used in the past. And given that the technology is being used more and more, we believe that the privacy and accuracy concerns are something that the agencies need to be focused on.

Adams: And I’m guessing that’s all tied up in the civil liberties concerns.

Goodwin: Exactly, exactly.

Adams: There’s been a lot of research on the biases in facial recognition software, particularly in terms of how it identifies Black and brown faces. How are agencies taking that into account when they’re deciding how they use the software?

Goodwin: In recommending that the agencies do a better job of monitoring the usage of the technology, one of the reasons is because there are significant privacy and accuracy considerations. And it’s really important because there are many opportunities for the technology to be abused.

Adams: It seems like, going through this list, the recommendations are about how to use facial recognition better, not over whether to use it at all. Has that ship sailed?

Goodwin: I won’t say the ship has sailed, but I will say that if the agencies are using the technology, our recommendation will help them use it in such a way that they have insight into how it’s being used, they are complying with privacy laws and that the systems that they are using are sufficiently accurate.

Related Links: More insight from Kimberly Adams

The full report includes more detail on how agencies are using facial recognition, the GAO’s recommendations, and agency responses. And the Washington Post’s reporting on this provides some useful context, too.

Meanwhile, cities across the country have placed limits on the use of facial recognition, which is less effective on non-white faces. Some major providers of this technology have stopped working with police departments, and at least three cases of wrongful arrests stemming from facial recognition have led to lawsuits.

The Verge has a piece on a Maine law passed just this week, which the site calls “the strongest state facial recognition ban yet.” It basically prohibits any law enforcement agency from using it except in very specific circumstances.

Looking outside the U.S., a Reuters story details how Europe’s two privacy watchdogs are calling for a ban on the use of facial recognition in public spaces, which would run counter to draft European Union rules that would allow it for public security reasons.
