A new digital tool that can help people in abusive relationships

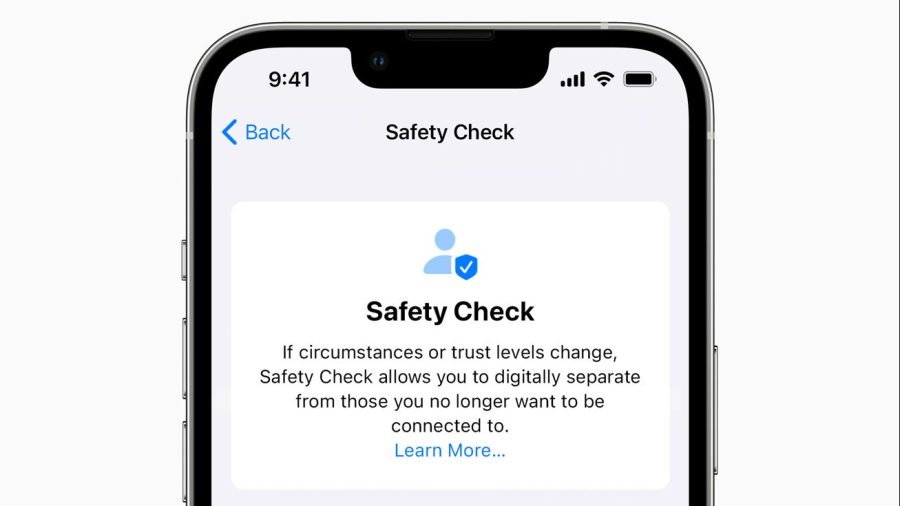

Apple’s latest operating system, iOS 16, includes a new feature, Safety Check. It’s a resource designed to give people in abusive relationships better ability to control — or regain control of — their privacy and communications.

Many criticized Apple after the rollout of its AirTag technology last year, warning it was being used to aid abusers in tracking and stalking their targets.

Marketplace’s Kimberly Adams speaks with Erica Olsen, director of the Safety Net Project at the National Network to End Domestic Violence, about how Apple’s Safety Check works. The following is an edited transcript of their conversation.

Erica Olsen: It can allow somebody to completely do an emergency reset. And that would immediately disconnect all sharing, it would stop sharing location, it would reset privacy permissions, it protects access to messages. If the abuser had previous access to somebody’s iCloud or to their device itself, and there was existing sharing happening with those accounts and devices, then they can use that feature to immediately disconnect and stop the person from having any of that access.

Kimberly Adams: Your organization, among others, helped advise Apple when they were creating the Safety Check feature. Where do you feel were your successes, and where do you feel like Apple could do more when it comes to this product and other safety features?

Olsen: We had several conversations about both the design and the functionality of Safety Check. And we were pleased that a lot of our input around the language, the building-in of resources and information and tips for survivors was included and built into the app so that survivors are able to go through this function with ease and also get some information along the way. There is a built-in exit, safety exit feature. Those are the kinds of things that we talked about in the design phase.

Adams: Can you walk me through how you imagine someone using the Safety Check feature if they are in danger?

Olsen: There’s often a conversation about when to do something like this, when to cut off access, because for some survivors, it might not be the safest thing to do that immediately because abusers can react and may escalate their tactics of abuse, if they’ve lost some form of control. And for some survivors, they’re going to start at that place of using the option and the functionality within Safety Check to assess who has access and where they’re sharing their information, and kind of go through that on an individual basis. And other people may be in the stage where they are trying to escape and get away from somebody and immediately cut all ties.

Adams: It feels like every time there’s a cool new technology, someone finds a way to ruin it. And that seems to have happened with AirTags. What was wrong there?

Olsen: Yeah, that’s a good summary. We do see a lot of situations where abusers are misusing a technology that was never designed for that purpose. It was designed to help people do something, communicate better, or in the case of AirTags, find their lost items and things. But, of course, abusers can get really creative, unfortunately, and they will misuse truly anything that they can manipulate and include as a tactic of the abuse to basically extend the monitoring and the stalking and the harassment that they are trying to perpetrate. And with AirTags, it’s something that people have found they could misuse as a tool to track somebody’s location without their knowledge. And, of course, there are some safeguards, but people are still attempting to misuse them.

Adams: How, moving forward, as we move more into the space of internet of things, are you thinking about your strategy to help people who are in these intimate abusive relationships?

Olsen: So the ways that information is gathered, collected, and then available online that we are not even interacting with. The existence of data brokers, for example, that is a consistent concern. The internet of things, that is an evolving space that we are looking at. So there’s a lot of technology that includes the sharing of accounts, and then also the sharing of information, that we are focused on.

Related links: More insight from Kimberly Adams

If you or someone you know is in an abusive relationship you can call the National Domestic Violence Hotline at 1-800-799-7233.

Erica’s organization also has a list of other hotlines, from groups like the National Sexual Assault Hotline, the National Teen Dating Abuse Helpline and the StrongHearts Native Helpline.

The Safety Net Project Erica runs has a whole toolkit for victims and survivors of domestic violence. It includes a Technology Safety Plan, which encourages victims to connect with resources like hotlines or information about safehouses using a device the abuser can’t access, like a friend’s cellphone or even a library’s computer.

At the top, I mentioned the controversy over Apple’s AirTags. The Verge had a piece on that back in March, which details how AirTags can be used by abusers and other criminals, as well as what users and Apple can do to make interactions with AirTags safer.
