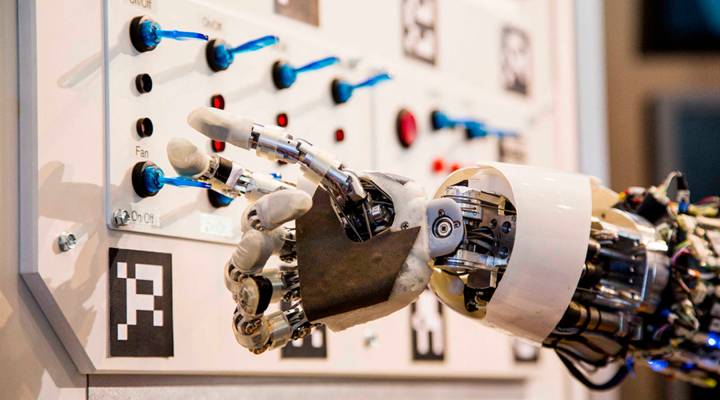

Will AI eventually let us enhance our own brains?

Machines are getting stronger and smarter. They may soon be our competitors and colleagues in the workforce. What would happen if we used them to enhance our own brains? Nick Bostrom is the director of the Future of Humanity Institute at the University of Oxford, and author of “Superintelligence: Paths, Dangers, Strategies.” Bostrom sat down with Molly Wood to talk about what would happen if artificial and human intelligence meet. Below is an edited transcript.

Molly Wood: Do you think artificial intelligence will eventually let us enhance our own brains?

Nick Bostrom: So there are a number of possible routes that could lead to superintelligence, a form of general intelligence that vastly outstrips current human intelligence. You could imagine enhancements to our biological cognitive capacities through genetic selection, genetic engineering or some other method. Or you could imagine artificial intelligence getting there first. To some extent it's an open question whether the biotech route or the AI route will bring us superintelligence first. Ultimately, though, I think machine intelligence just has a vastly higher potential. There are fundamental limits to information processing in biological tissue such as our brains.

Wood: You have argued that, when it comes to artificial intelligence, we humans are playing with a bomb. There are plenty of good business cases being made for increasing the abilities of artificial intelligence. Do you think that economic push is likely to set that bomb off sooner?

Bostrom: Certainly. I mean, there are a lot of reasons for pushing forward with AI research. Increasingly, one of them is the commercial relevance of this technology. For a long time there has also been scientific curiosity driving this forward, along with spillover from other scientific disciplines like statistics, computer science and neuroscience. But for sure, the forces driving this forward now are very strong and getting stronger.

Wood: People really disagree about the timeline and, of course, on some level, about the threat. You've argued we're playing with a bomb, and Elon Musk and other technologists agree. Then there are people like Andrew Ng and engineers doing AI research who are saying, look, we're hundreds or thousands of years away from such an eventuality. What do you think the possible timeline is?

Bostrom: I can't actually think of anyone who thinks it's hundreds or thousands of years. I guess there are some who think it might never happen. But we did a survey of some of the world's leading machine learning experts, and one of the questions we asked was, “When do you think there will be human-level or high-level machine intelligence?” (defined as AI that can do all jobs at least as well as a human). Depending on how you ask the question, the median answer is usually about a 50 percent chance by 2045. And you're right that opinions are all over the place. There's certainly nothing like a consensus. Some people are very convinced it will happen much sooner and others are convinced it will take a lot longer. But it's still interesting to note that, for what it's worth, the average AI researcher thinks there's a very sizable chance of it happening within our lifetimes.

Wood: And would you say that machines then become competitors? Colleagues? Or is there also the possibility that we will increasingly merge with the machines, through the sort of neural lace technology that Elon Musk is talking about?

Bostrom: How it plays out depends on how well we manage this transition to the era of machine superintelligence. It's not clear what the outcome will be, so maybe some of the work we are doing now, some of the research into the AI alignment problem, could make a difference. It could make it more likely that we end up with a machine superintelligence that is on our side, that is a sort of extension of the human will, that's friendly to us and safe, as opposed to some alien, antagonistic force that ultimately overwhelms us.

Wood: And of course, as you say, that's assuming everything goes well, that we have a controlled detonation. What does the other scenario look like?

Bostrom: So there's a sequence of problems. In order to get the privilege of confronting this question of meaning and employment, we first have to make sure that we solve the technical AI alignment problem, so that we don't have some AI going off and making paper clips out of the whole planet because it happened to be misprogrammed with a goal of just wanting as many paper clips as possible. If we solve that technical problem, then we get to confront the economic problem. And if we solve that too, then maybe we get the privilege of confronting the meaning problem: what to do with the leisure and the wealth that you have.